A challenge of undergraduate design education is to move from an abstract idea to a tangible, evaluable 3D model. Technical demands often delay the essential processes of ideation and experimentation.

To address this, we developed a workflow called Sketch, AI+AR for first-semester students across architecture, strategic design, digital art, communication and music production programmes.

Our methodology slows down the early stages of the creative process – through mandatory hand-sketching and observational reflection – to ultimately accelerate the broader design cycle. Here is how the process unfolds.

Step 1: Don’t skip the sketch

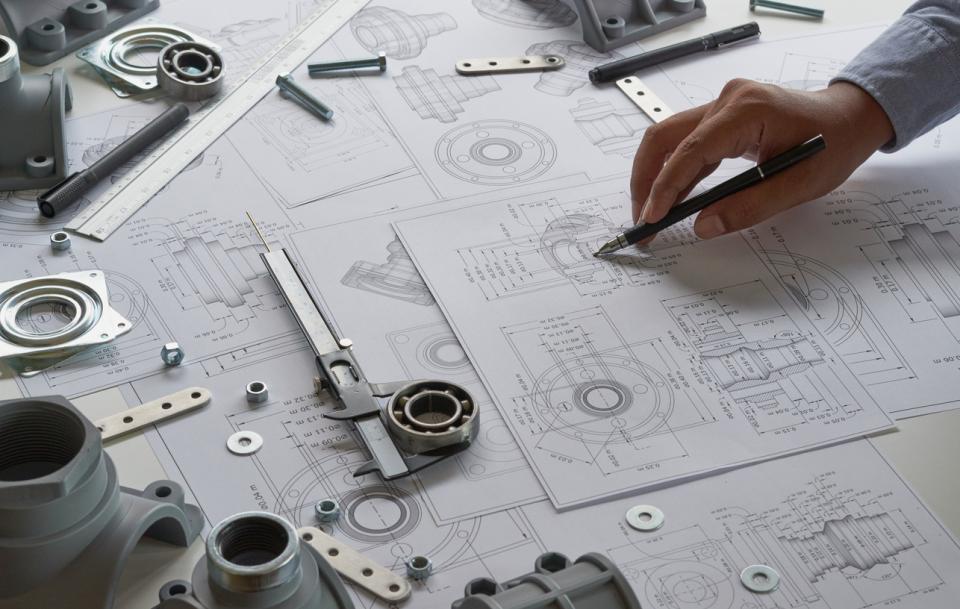

Before using generative artificial intelligence (GenAI), students must begin with hand-drawn diagrams. This critical step enforces intentionality. Students reflect on what they want to explore, be it a material, spatial emotion or response to a sustainability challenge, and photograph their sketches. This ensures that the human design intention is clearly articulated before involving machine learning.

Tip: Encourage students to annotate their sketches with specific keywords or “design intentions” (eg, “soft light”, “vertical rhythm”) to help guide their GenAI prompts. These act as conceptual anchors.

Step 2: Prompt with purpose, and iterate

Students then generate concepts using platforms such as Midjourney, Adobe Firefly or DALL-E, writing descriptive prompts that integrate insights from their sketches and background research. Crucially, the process is recursive.

Students blend their early AI-generated visuals with photos of their initial sketches or study models, and feed these hybrid visuals back into the model, refining outputs until they align more closely with their design intent.

This multimodal prompt method fosters critical iteration, as students visually compare outputs, evaluate and fine-tune their inputs instead of settling on the first AI-generated result.
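
For instructors who want students to keep a structured record of this loop, the following is a minimal sketch in Python. It assumes a simple, hypothetical log format (`DesignLog`, `Iteration`, `build_prompt` and the file names are illustrative, not part of the Sketch, AI+AR project) and does not call any image-generation platform. It only assembles prompt text from the sketch keywords and records which images were fed back in at each round.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Iteration:
    """One round of the sketch-to-image loop (hypothetical record format)."""
    prompt: str
    reference_images: list[str]
    reflection: str = ""


@dataclass
class DesignLog:
    """Holds the sketch keywords and every prompt tried, in order."""
    intentions: list[str]
    iterations: list[Iteration] = field(default_factory=list)

    def build_prompt(self, subject: str,
                     refinements: Optional[list[str]] = None) -> str:
        # Subject first, then the sketch annotations, then later refinements.
        parts = [subject, *self.intentions, *(refinements or [])]
        return ", ".join(p.strip() for p in parts if p.strip())

    def record(self, prompt: str, reference_images: list[str],
               reflection: str = "") -> None:
        # Store a copy of the image list so later edits do not alter the log.
        self.iterations.append(
            Iteration(prompt, list(reference_images), reflection)
        )


# Illustrative usage: keywords come from the annotated hand sketch.
log = DesignLog(intentions=["soft light", "vertical rhythm"])

first = log.build_prompt("reading pavilion")
log.record(first, ["sketch_01.jpg"], "roof reads as too heavy")

# Second round: the sketch and the first AI image are both fed back in.
second = log.build_prompt("reading pavilion", ["slender timber columns"])
log.record(second, ["sketch_01.jpg", "ai_round_1.png"])

print(second)
```

Keeping the original keywords in every prompt is a deliberate choice in this sketch: refinements are appended rather than substituted, so the intention stated in the hand drawing stays visible across rounds.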

Step 3: Bring the image into the world

Next, students translate their refined 2D concepts into three-dimensional models using one or more of the following paths:

Digital modelling: Students create volumetric models, 3D representations of space with measurable depth, height and width, using tools such as SketchUp or Rhino.

Physical-to-digital: Students build physical prototypes from cardboard, clay or found materials and digitise them using LiDAR (light detection and ranging) sensors – a scanning technology, integrated in devices such as the iPad Pro, that captures precise 3D spatial information.

AI-assisted 3D generation: Platforms such as Tripo AI convert 2D images into geometric models, providing structured, spatial digital objects made of polygons and surfaces that can be explored and manipulated in a 3D environment.

Finally, students visualise their models using augmented reality (AR) on tablets or mobile phones. By viewing the object at full scale, students can better assess the form, materiality, light and spatial impact.

Pro tip: Ask students to record short AR walkthroughs with voice-over reflections to reinforce learning and provide a portable record of iteration and outcomes.

This method blends analogue and digital approaches and has shown promising results in three areas.

Dual literacy development: Students gain both AI literacy (prompt engineering, critique) and spatial literacy (scale, material, human experience).

Logic over aesthetics: AR visualisation helps expose formal inconsistencies in designs that may look “cool” as 2D AI-generated images but fail under real-world dimensional analysis.

Scalability and engagement: We’ve adapted this methodology to high school outreach programmes and maker workshops using simplified workflows to introduce young learners to design thinking through generative tools.

Sketch, AI+AR shows that scaffolding technology use with drawing and critical observation does not replace student creativity; it improves it. By uniting hand-sketching, GenAI and spatial validation through AR, we train students to design reflectively, iteratively and with purpose.

AI use declaration: The authors confirm that the core content, original ideas, research findings, and pedagogical insights shared in this article are exclusively the product of the authors’ institutional experience with the Sketch, AI+AR project at Tecnológico de Monterrey.

Large language models (specifically, Google Gemini and ChatGPT) were used as collaborative writing assistants. Their role was strictly limited to refining the professional tone, improving grammatical structure and ensuring narrative flow. All generated text was rigorously reviewed, edited and approved by the human authors to ensure accuracy and authenticity.

Lesly Pliego is a programme director and assistant professor; Fernanda Peña is an adjunct professor; Antonio Juárez is an assistant professor. All are at the School of Architecture, Art and Design at Tecnológico de Monterrey, Mexico.
